Embedded Plant Disease Recognition Using Deep PlantNet on FPGA-SoC

keywords: FPGA, deep CNN, co-design, hardware acceleration, PYNQ-Z1

Technological breakthroughs over the last several decades have transformed a variety of industries, including agriculture. This has given rise to what is now known as Agriculture 4.0, which emphasizes strategy and systems rather than traditional practices. As a result, many manual procedures have been replaced by a new generation of intelligent devices. Crop production management in Agriculture 4.0 nevertheless poses a considerable challenge, particularly with respect to prompt and accurate crop disease identification. Plant diseases are of special significance because they significantly reduce agricultural yield in both quality and quantity. To address this difficulty, deep learning neural network models are being used for the early diagnosis of plant diseases. These models can automatically extract features, generate high-dimensional features from low-dimensional ones, and achieve better learning results. In this research, we offer a joint solution involving image processing, phytopathology, and embedded platforms that aims to reduce the time required for human labor by leveraging AI to facilitate plant disease detection. We propose a learning-based PlantNet architecture for detecting plant diseases from leaf images, which achieved about 97 % accuracy and a loss of about 0.27 on the PlantVillage dataset. Deploying AI techniques on embedded systems is also worth considering, because it can substantially cut energy consumption and processing time while minimizing the costs and risks involved with data transfer. The second goal of this paper is therefore to use high-level synthesis to accelerate the proposed PlantNet architecture. Moreover, we propose a hardware-software (HW/SW) design for integrating the suggested vision system on an embedded FPGA-SoC platform. Finally, we present a comparative study with state-of-the-art works, which demonstrates that the proposed design outperforms the others in terms of normalized GFLOPS (1.93), power consumption (2.48 W), and processing time (0.04 seconds).

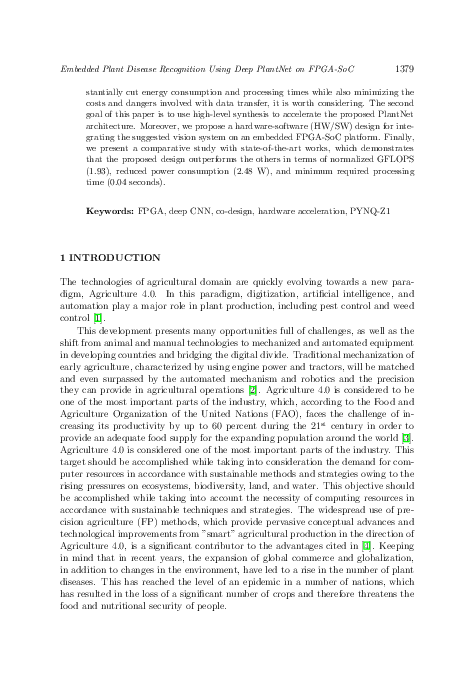

reference: Vol. 42, 2023, No. 6, pp. 1378–1403